Author: Jedrzej Rybicki (FZJ)

Contributors: Anna Queralt (BSC), Maria Petrova-El Sayed (FZJ), Fabrizio Marozzo (DtoK Lab), François Exertier (Atos), Jorge Ejarque (BSC)

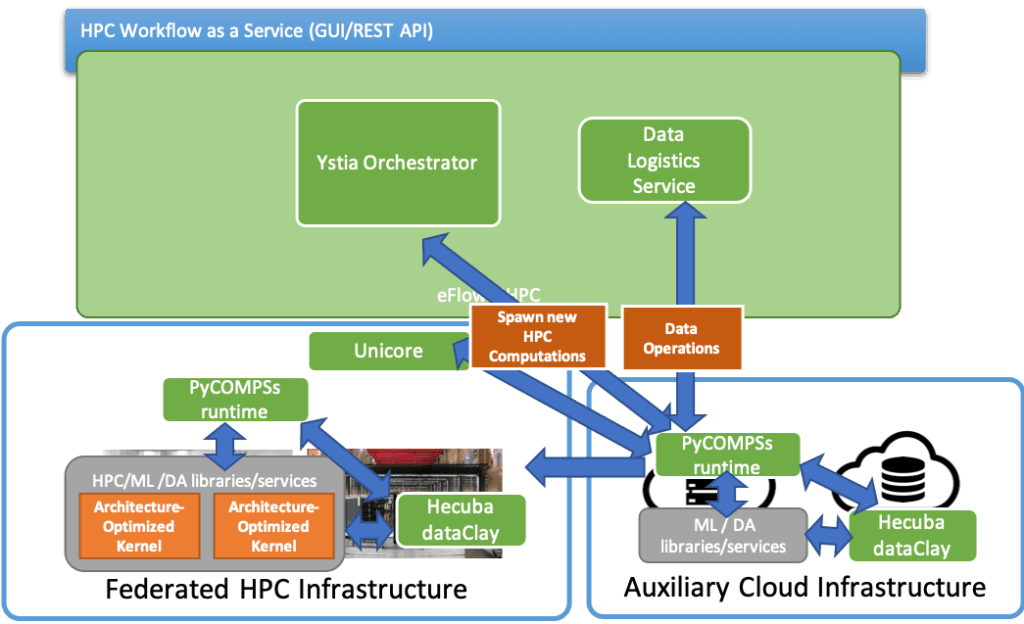

The eFlows4HPC project will implement a European workflow platform that enables the development and execution of applications leveraging HPC, data analytics, and artificial intelligence. A key success factor for the project is the quick, easy, and efficient deployment of the resulting solution across heterogeneous resources. Below, we show how this multifaceted challenge is addressed.

Firstly, both the supporting services and the required scientific codes need to be made available and executable on the target resources. To this end, eFlows4HPC is looking towards modern container solutions, which will be combined with and adapted to the project's orchestration solution. Beyond easing deployment, packaging scientific code in containers strongly contributes to the reproducibility of research.

The containers created in the project will be made available in a software catalog, allowing reuse both within and outside the project. In the case of the eFlows4HPC services, the flexibility of container-based deployment will be further amplified by the flexibility of the underlying resources of the Helmholtz Data Federation Cloud. This will make it possible to accommodate changes in user requirements, create on-demand instances of popular services, and provide the expected fault tolerance.

The deployment process also needs to safeguard the availability of the relevant data, in the format required by the particular workflow. To this end, eFlows4HPC will establish a Data Logistics Service and employ modern data management and storage solutions: dataClay, Hecuba, and Ophidia.

The envisioned data movement will not only deploy the required data sets on the processing resources. It will also support registering the created artefacts, i.e., computation results, with external repositories. This, together with the formalization of the data movements, is crucial to implementing the vision of FAIR (Findable, Accessible, Interoperable, Reusable) scientific endeavours.

Workflow deployment and service operations across heterogeneous resources

Lastly, a cross-cutting concern of the deployment is its optimisation, including in terms of energy efficiency. Here again, the above-mentioned storage solutions dataClay, Hecuba, and Ophidia help by enabling efficient in-storage and in-memory processing. Furthermore, a more holistic view of workflow executions will be established, and executions will be optimised by reducing data movements, using specialised resources, and setting the execution parameters correctly.

eFlows4HPC plays an important role as an intermediary, mapping the needs of scientific users onto the technical solutions created in the project. These needs can only be satisfied if the created solutions are deployed effectively.